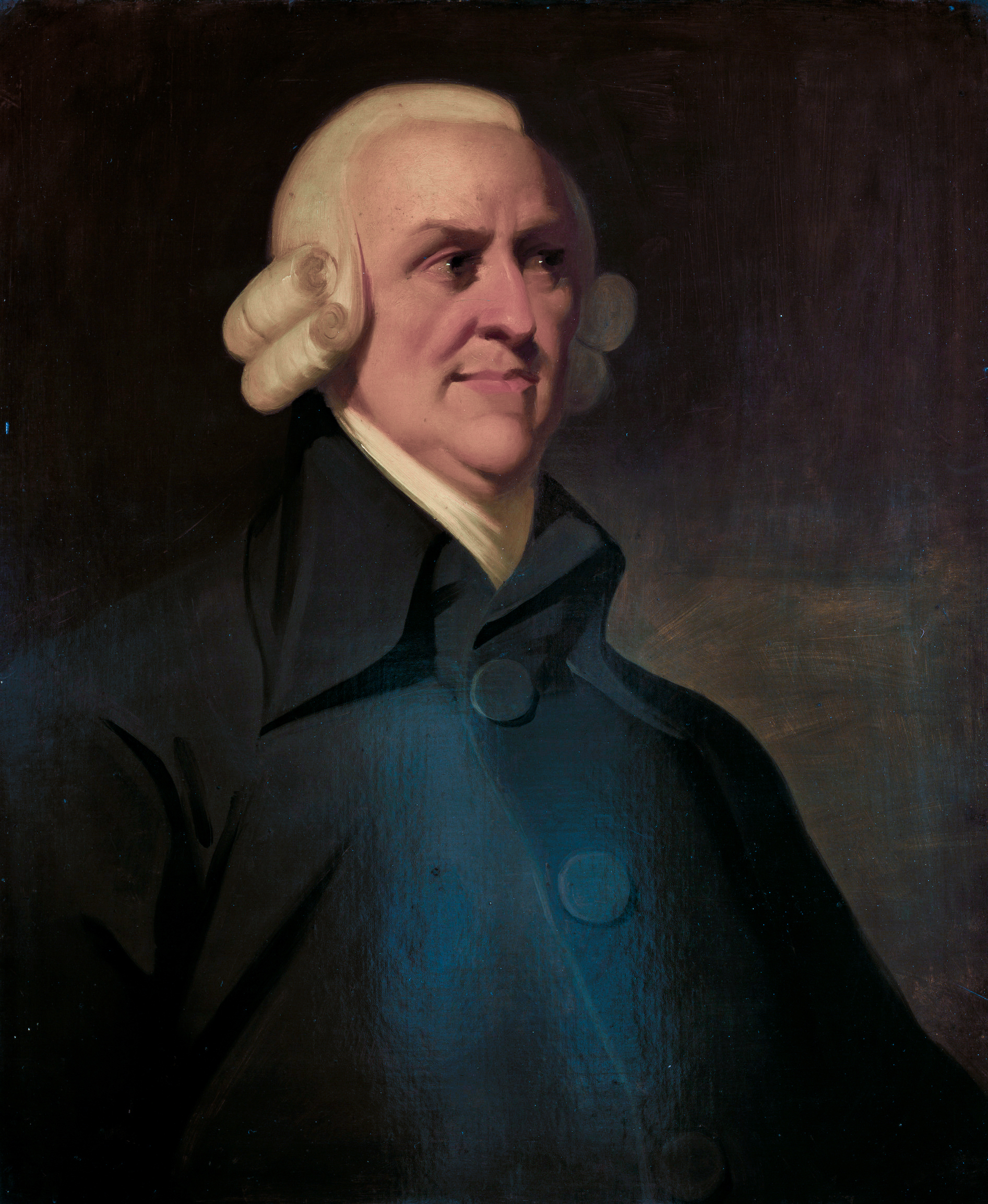

W. V. O. Quine

There are different levels of disagreeing with a theory. To illustrate, we can imagine ourselves as the central planner dispensing funds to researchers. The lowest level of disagreement is outright rejection - if a plan for a spaceship begins with "Assume we can violate the laws of thermodynamics..." I would simply not disburse any funds. Above this, there is non-fundamentalness. If I see a numerical simulation of a spaceship engine which I know doesn't respect energy conservation exactly, I might not dismiss it out of hand, but would pay for an investigation into whether the simulation's flaws are fundamental. Off to the side, above rejection and below non-fundamentalness but not on the line between them, there is suspicion. To speak ostensively: W. V. O. Quine's attitude toward Carnap's dogmas was suspicion - he thought them neither wastes of paper nor flawless. The notion of "analyticity" - sentences being true by definition - seemed to him both worth investigating and a bad foundation for the reconstruction of scientific investigation.

As Quine was suspicious of any empiricism that took analyticity as atomic, I am suspicious of the philosophies that, in the main, call themselves "Bayesianism". What exactly Bayesianism consists of and when it began is not easy to say, because self-described Bayesians do not all agree with one another.

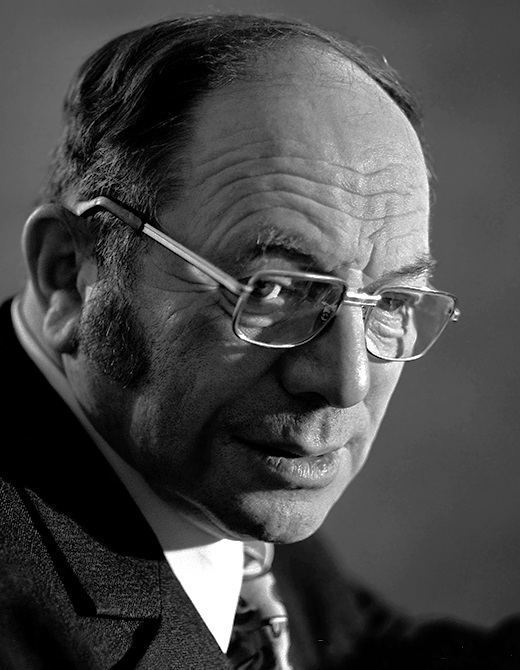

The followers of de Finetti and Savage would trace Bayesianism to the logicians Ramsey and Wittgenstein. And indeed, whoever you think deserves credit as the origin of Bayesian philosophy, one must grant that Ramsey and Wittgenstein gave unusually clear statements of it. In Wittgenstein, the intuitions of Bayesian philosophy are expounded in the 5.1x propositions of his Tractatus. Ramsey gave these notions a more explicit development in his paper "Truth and Probability". The particular style of argument on pages 19-23 of this paper has become known as "Dutch Book Arguments".

The general concept is simple. We start with a Wittgensteinian metaphysics: each possible state of the world corresponds to some proposition p. The propositions can be "arranged in a series" - there is an ordering Rpq such that there is an isomorphism between the propositions and the real numbers that respects the probability calculus. This ordering is something like "q is at least as good as p". Further, Ramsey realized, the relation R comes pretty near to an intuitive idea of rational behavior. Even more interesting, the converse also holds (or nearly holds): "If anyone's mental condition violated these laws ... [he] could have a book made against him by a cunning bett[o]r and would then stand to lose in any event". This philosophical interpretation, a two-way equivalence between orderings on propositions and the probability calculus, is what I call the first dogma of Bayesianism.
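To make the mechanism concrete, here is a minimal sketch of the simplest Dutch Book, assuming a partition of propositions (exactly one is true) and an agent who treats its credence in a proposition as the fair price of a ticket paying 1 unit if that proposition is true. The function name `dutch_book` and this pricing convention are illustrative assumptions, not Ramsey's notation, and because payoffs are counted in money the sketch quietly assumes value is linear in money.

```python
from fractions import Fraction

def dutch_book(credences):
    """Return the agent's net payoff in each world from a book on a partition.

    `credences` maps each cell of a partition (exactly one cell is true)
    to the agent's fair price for a ticket paying 1 if that cell is true.
    If the prices sum above 1, the bookie sells the agent one ticket on
    every cell; if they sum below 1, the bookie buys one from the agent.
    Either way exactly one ticket pays 1, so the payoff is the same in
    every world.
    """
    total = sum(credences.values())
    if total > 1:
        # Agent pays `total` up front and collects 1 whichever cell is true.
        return {cell: 1 - total for cell in credences}
    if total < 1:
        # Agent receives `total` up front and pays out 1 whichever cell is true.
        return {cell: total - 1 for cell in credences}
    # Coherent prices: no book guarantees a loss this way.
    return {cell: Fraction(0) for cell in credences}

incoherent = {"rain": Fraction(3, 5), "no rain": Fraction(3, 5)}
print(dutch_book(incoherent))  # {'rain': Fraction(-1, 5), 'no rain': Fraction(-1, 5)}
```

This exercises only additivity over a single partition; Ramsey's argument extends the same sure-loss construction to the rest of the probability calculus.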

Many other Bayesians - such as the followers of Jaynes - would trace it to the great physicists Laplace and Gibbs. The Bayesianism of early physicists is, granting that it exists at all, implicit and by example. Gibbs asked us to imagine a large collection of experiments floating in idea space. We should expect* that our actual experiment could be any one of those experiments, the great mass of which are functionally identical. Consider a classical ideal gas held in a stiff container, say a bottle. In the ensemble of possible experiments, there are a few where the gas is entirely in the lower half of the bottle. But in the great mass of possible experiments the gas has had time to spread through the bottle. Therefore, for the great mass of possibilities, the volume the gas occupies is the volume of the bottle. This means that if we measure the temperature, we get the pressure for free. Using Iverson brackets, we see that for each possible temperature t and pressure p, Prob(P = p | T = t) = [p = (nR/V)t], or near abouts. What we get out of measuring the temperature - that is, putting a probability distribution on temperature - is a probability distribution on pressure. The lesson the Gibbs example teaches ostensively is - supposedly - that what we want out of an experiment is a "posterior probability". This is the second dogma of Bayesianism.
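The Gibbs example can be sketched in a few lines, assuming a discrete distribution over temperature and treating the ideal gas law as exact rather than "near abouts". The conditional Prob(P = p | T = t) is the Iverson bracket [p = (nR/V)t], so summing it against the temperature distribution yields the pressure distribution. The function names, the rounded gas constant and the example numbers are illustrative assumptions; exact `Fraction` arithmetic is used so that the equality inside the bracket is meaningful.

```python
from fractions import Fraction

# Gas constant in J/(mol*K), rounded to 8.314 for the example.
R = Fraction(8314, 1000)

def iverson(condition):
    """Iverson bracket: 1 if the condition holds, else 0."""
    return 1 if condition else 0

def pressure_distribution(temp_dist, n, volume):
    """Push P(T = t) through P(P = p | T = t) = [p = (nR/V)t].

    temp_dist maps temperatures (K) to probabilities; volume is in m^3,
    so pressures come out in Pa.
    """
    def pressure(t):
        return n * R * t / volume

    candidates = {pressure(t) for t in temp_dist}
    return {
        p: sum(prob * iverson(p == pressure(t)) for t, prob in temp_dist.items())
        for p in candidates
    }

# One mole in a 25-litre bottle; temperature is 300 K or 310 K with equal odds.
dist = pressure_distribution({300: Fraction(1, 2), 310: Fraction(1, 2)},
                             n=1, volume=Fraction(1, 40))
for p, prob in sorted(dist.items()):
    print(float(p), prob)
```

Because the bracket only takes the values 0 and 1, the pressure distribution is the temperature distribution relabelled: measuring one fixes the other.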

Both dogmas are open to question. Let us question them.

It is almost too easy to pick on the Dutch Book concept. Ramsey himself expresses a great deal of skepticism about the general argument: "I have not worked out the mathematical logic of this in detail, because this would, I think, be rather like working out to seven places of decimals a result only valid to two." Ramsey even gives a cogent criticism of the application of the Dutch Book Argument (one foolishly tossed aside as ignorable by Nozick in The Nature Of Rationality):

"The old-established way of measuring a person's belief is to propose a bet, and see what are the lowest odds which he will accept. This method I regard as fundamentally sound; but it suffers from being insufficiently general, and from being necessarily inexact. It is inexact partly because of the diminishing marginal utility of money, partly because the person may have a special eagerness or reluctance to bet, because he either enjoys or dislikes excitement or for any other reason, e.g. to make a book."

The assumption "Value Is Linear Over Money" implies the Ramsey - von Neumann - Morgenstern axioms easily, but it is also a false empirical proposition. Defining a behavioristic theory in terms of behavior towards non-existent objects is the height of folly. Money doesn't stop being money because you are using it in a Bayesian probability example.
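Ramsey's complaint about the diminishing marginal utility of money can be made concrete. The sketch below assumes, for illustration only, an agent with logarithmic utility of wealth (Daniel Bernoulli's classic choice) facing an even-money bet; the function names are hypothetical. With wealth 100 and a stake of 50, a linear-utility agent is indifferent to a fair bet, while the log-utility agent refuses it and only accepts once its win probability exceeds ln 2 / ln 3, about 0.63, so its betting behavior is a distorted gauge of its credence.

```python
import math

def expected_utility(wealth, stake, p_win, utility=math.log):
    """Expected utility of taking an even-money bet of `stake` at `wealth`."""
    return p_win * utility(wealth + stake) + (1 - p_win) * utility(wealth - stake)

def accepts_bet(wealth, stake, p_win, utility=math.log):
    """The agent bets only if betting does not lower its expected utility."""
    return expected_utility(wealth, stake, p_win, utility) >= utility(wealth)

def breakeven_probability(wealth, stake, utility=math.log):
    """Lowest win probability at which the even-money bet is acceptable."""
    u_now = utility(wealth)
    u_win, u_lose = utility(wealth + stake), utility(wealth - stake)
    return (u_now - u_lose) / (u_win - u_lose)

def linear(w):
    """Value linear in money: the assumption Ramsey warns against."""
    return w

print(accepts_bet(100, 50, 0.5, linear))          # True: indifferent to a fair bet
print(accepts_bet(100, 50, 0.5))                  # False: log utility declines it
print(round(breakeven_probability(100, 50), 3))   # 0.631
```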

This brings us to the deeper problem of rationality in non-monetary societies. Rationality was supposed to reduce the probability calculus to something more basic. But now it seems to imply that rationality was invented with coinage. Did rationality change when we (who is this 'we'?) went off the gold standard? These are absurd implications, but phrasing a theory of rationality in terms of money seems to imply them. What about Dawkins' "Selfish Gene"? Isn't its rational pursuit of "self-interest" (in abstract terms) one of the deep facts about it? Surely this is a theory that might be right or wrong, not a theory that is a priori wrong because genes don't care about money.

Related to this, the Dutch Book hypothesis seems to assume that people are "irrationally irrational" - they take their posted odds far too literally. As the Stanford Encyclopedia Of Philosophy article on Dutch Books puts it:

"An incoherent agent might not be confronted by a clever bookie who could, or would, take advantage of her, perhaps because she can take effective measures to avoid such folk. Even if so confronted, the agent can always prevent a sure loss by simply refusing to bet."

This argument is clarified by thinking in evolutionary terms. Let there be three kinds of birds: blue jays, cuckoos and dodos. Further assume that we've solved the problem from two paragraphs ago and have an understanding of what it means for an animal to bet. Dodos are trusting and give 110%. Dodos will offer and accept some bets that don't conform to the probability calculus (as well as fair bets). Cuckoos - as is well known - are underhanded and will cheat a dodo if they can. They will offer but never accept bets that don't conform to the probability calculus (and they accept fair bets). Blue jays are rational: they accept and offer only fair bets. Assuming spatially mixed populations, there are seven distinct cases: Dodos only, Blue Jays only, Cuckoos only, Dodo/Blue Jay, Dodo/Cuckoo, Cuckoo/Blue Jay and Dodo/Cuckoo/Blue Jay. Contrary to naive Bayesian theory, each of the pure cases is stable. Dodo/dodo interaction is a wash: every unfair bet lost by a dodo is an unfair bet won by a dodo. Pure blue jay and pure cuckoo interactions are identical. Dodo/blue jay interactions may come out favorably to the dodo but never to the blue jay: the dodos can do favors for each other, but the blue jays can neither offer nor accept favors from dodos or blue jays. The dodo is weakly evolutionarily dominant over the blue jay. Dodo/cuckoo interaction can only work out in the cuckoo's favor, but contrariwise a dodo can do a favor for a dodo while a cuckoo cannot offer a favor to a cuckoo (though it can accept such an offer). We'll call this a wash. Cuckoo/blue jay interaction is identical to cuckoo/cuckoo and blue jay/blue jay interaction, so neither can drive the other out. Finally, a total mix is unstable - dodos can drive out blue jays. The strong Bayesian blue jay is on the bottom. Introducing space (à la Skyrms) makes the problem even more interesting. One can easily imagine an inner ring of dodos pushing an ever-expanding ring of blue jays that shields them from the cuckoos. There are plenty of senses of the word "stable" under which such a ring system is stable.
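The case count in the bird example is simple combinatorics: with three species there are 2**3 - 1 = 7 non-empty populations, but only six kinds of pairwise encounter (three same-species, three cross-species), which are the interactions the paragraph walks through. A short sketch with hypothetical names:

```python
from itertools import combinations, combinations_with_replacement

SPECIES = ("dodo", "cuckoo", "blue jay")

def population_cases(species=SPECIES):
    """Every non-empty mix of species: 2**n - 1 of them."""
    return [c for k in range(1, len(species) + 1) for c in combinations(species, k)]

def interaction_types(species=SPECIES):
    """Every unordered pairing of two birds, including same-species pairs."""
    return list(combinations_with_replacement(species, 2))

print(len(population_cases()))   # 7
print(len(interaction_types()))  # 6
```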

What does the above analysis tell us? We called Dodo/Cuckoo interactions a wash, but that really depends on which irrational bets Dodos and Cuckoos accept, reject and offer. As Ramsey says, for a young male Dodo "choice ... depend[s] on the precise form in which the options were offered him...". Ramsey finds this "absurd". But to avoid it by assuming that we are living in a world which is functionally only blue jays seems an unforced restriction.

The logic underlying Bayesian theory is itself impoverished. It is a propositional logic lacking even monadic predicates (I can point to Jaynes as an example of Bayesian theory not going beyond propositional logic). This makes Bayesian logic less expressive than Aristotelian logic!

That's no good!

Such a primitive logic has a difficult time with sentences which are "infinite" - have countably many terms - or even just have infinite representations. (An example infinite representation of the rational number 1/4 is a lazily evaluated list that spits out [0, ., 2, 5, 0, 0, ...]) Many Bayesians, such as Savage and de Finetti, are suspicious of infinite combinations of propositions for more-or-less the same reasons Wittgenstein was. Others, such as Jaynes and Jeffery, are confident that infinite combinations are allowed because to suppose otherwise would make every outcome depend on how one represented it. Even if one accepts infinite combinations, there also is the (related?) problem of sentences which would take infinitely many observations to justify.
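The lazily evaluated list for 1/4 can be sketched as a Python generator that performs long division on demand. The name `decimal_expansion` is hypothetical, and this is one representation among many. The object is finite to write down, but it never finishes producing output, so any consumer has to decide how much of it to look at.

```python
from fractions import Fraction
from itertools import islice

def decimal_expansion(x):
    """Lazily yield the decimal representation of a non-negative rational x.

    Yields the integer part (as a single int), then ".", then an endless
    stream of digits, trailing zeros included, produced by long division.
    """
    x = Fraction(x)
    if x < 0:
        raise ValueError("this sketch handles only non-negative numbers")
    integer_part, remainder = divmod(x.numerator, x.denominator)
    yield integer_part
    yield "."
    while True:
        remainder *= 10
        digit, remainder = divmod(remainder, x.denominator)
        yield digit

print(list(islice(decimal_expansion(Fraction(1, 4)), 6)))  # [0, '.', 2, 5, 0, 0]
```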

An example of a simple finite sentence that takes infinitely many experiments to test is "A particular agent is Bayesian rational." This is a monadic sentence that isn't in propositional logic. Unless one tests every possible combination of logical atoms, there's no way of telling whether the next one is out of place. The non-"Bayes observability" of Bayes rationality has inspired an enormous amount of commentary from people like Quine and Donald Davidson, who accept the Wittgensteinian logical behaviorism that Bayesianism is founded upon.

So, is this literature confused, or is it getting at something deeper? I don't know. But I believe one can now see that Dutch Book arguments are not hard to knock down for lack of criticisms. The real reason is that the criticisms don't seem to go to the core of the theory. A criticism of the Dutch Book needs to be like a statistical mechanics criticism of thermodynamics - it must explain both what the Dutch Book gets right and what it misses. There does seem to be something there which will survive.

What You Want Is The Posterior

Okay okay okay, be that as it may, isn't it the case that what we want is the posterior odds? If D is some description of the world and E is some evidence we know to be actual, then we want P(D|E), right? Well, hold your horses. I say there's the probability of a description given evidence, and there's the probability of the truth of a theory, and never the twain shall meet. To see it, let's meet our old uncle Noam.
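For reference, the posterior P(D|E) comes from Bayes' theorem. Writing it out shows what the calculation presupposes: a prior P(D) and a likelihood P(E|D) for every candidate description, with the denominator summing over a set of mutually exclusive, exhaustive rival descriptions D' (assumed countable here).

```latex
P(D \mid E) = \frac{P(E \mid D)\,P(D)}{P(E)}
            = \frac{P(E \mid D)\,P(D)}{\sum_{D'} P(E \mid D')\,P(D')}
```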

Chomsky was talking about other people. Who he thought he was talking about isn't important, but he was really talking about Andrey Markov and Claude Shannon. Andrey Markov developed a crude mathematical description of poetry. He could write a machine with two states, "print a vowel" and "print a consonant". He could give a probability for transferring between those states - given that you just printed a vowel (consonant), what is the probability you will print a consonant (vowel)? The result will be nonsense, but it will look astonishingly like Russian. You can get more and more Russian-like words just by increasing the state space.

Our friend Claude Shannon comes in and tells us "Look, in each language there are finitely many words. Otherwise, language would be unlearnable. Grammar is just a grouping of words - nouns, verbs, adjectives, adverbs. By grouping the words and drawing connections between them in the right way we can get astonishingly English-looking sentences pretty quickly."
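Markov's two-state machine can be sketched as below. The transition probabilities and letter sets are made-up assumptions for illustration (not estimates from Russian text), Latin letters stand in for Cyrillic, and `generate` is a hypothetical name. Increasing the state space, for instance by conditioning on the previous two letters, is the refinement described above.

```python
import random

# Hypothetical transition probabilities, chosen for illustration only.
TRANSITIONS = {
    "vowel": {"vowel": 0.15, "consonant": 0.85},
    "consonant": {"vowel": 0.65, "consonant": 0.35},
}
LETTERS = {"vowel": "aeiou", "consonant": "bcdfghklmnprstvz"}

def generate(length, start="consonant", seed=None):
    """Run the two-state chain, emitting a random letter of each state's kind."""
    rng = random.Random(seed)
    state, out = start, []
    for _ in range(length):
        out.append(rng.choice(LETTERS[state]))
        next_probs = TRANSITIONS[state]
        # Pick the next state according to the transition probabilities.
        state = rng.choices(list(next_probs), weights=list(next_probs.values()))[0]
    return "".join(out)

print(generate(20, seed=1))
```

Each letter depends only on whether the previous one was a vowel or a consonant; nothing in the machine knows about words, let alone meanings.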

Now our "friend" Chomsky. He says "Look, you have English-looking strings. They're syntactically correct. But you don't have English! The strings are not semantically constrained. There is no way to write a non-trivial machine that both passes Shakespeare and fails a nonsense sentence like 'Colorless green ideas sleep furiously.'! In order to know that sentence fails, the machine needs to know ideas can't sleep, ideas can't be green, green things can't be colorless and one cannot sleep in a furious manner."
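A deterministic cousin of the Shannon machine makes Chomsky's point concrete: drop the probabilities, keep only which word classes may follow which, and check sentences against that finite-state graph. The word lists, class names and transitions below are hypothetical. The sketch only shows that this particular machine accepts the nonsense sentence along with a sensible one; Chomsky's claim is stronger, covering every machine of this kind.

```python
# Hypothetical vocabulary: each word belongs to exactly one class.
WORD_CLASS = {
    "colorless": "ADJ", "green": "ADJ", "curious": "ADJ",
    "ideas": "NOUN", "children": "NOUN",
    "sleep": "VERB", "play": "VERB",
    "furiously": "ADV", "quietly": "ADV",
}

# Which classes may follow which; "END" marks where a sentence may stop.
CLASS_TRANSITIONS = {
    "START": {"ADJ", "NOUN"},
    "ADJ": {"ADJ", "NOUN"},
    "NOUN": {"VERB"},
    "VERB": {"ADV", "END"},
    "ADV": {"END"},
}

def grammatical(sentence):
    """True if the sentence's word classes trace a path from START to END."""
    state = "START"
    for word in sentence.lower().split():
        word_class = WORD_CLASS.get(word)
        if word_class is None or word_class not in CLASS_TRANSITIONS[state]:
            return False
        state = word_class
    return "END" in CLASS_TRANSITIONS[state]

print(grammatical("Curious children play quietly"))          # True
print(grammatical("Colorless green ideas sleep furiously"))  # True
print(grammatical("Furiously sleep ideas green colorless"))  # False
```

Rejecting the Chomsky sentence while keeping the sensible one would require knowing that ideas cannot sleep, which is exactly the semantic knowledge a word-class graph does not carry.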

Now, this is a scientific debate about models for language. Can it be put in a Bayesian manner? It cannot. The Markov-Shannon machine - by construction - matches the probabilistic behavior of the strings of the language. But it cannot be completely correct (otherwise, the languages described by regular expressions would be the languages recognized by Turing machines). Therefore, the statement "What we want is the posterior odds!" cannot be entirely true.

I have said before that I am in the "Let Ten Thousand Flowers Bloom" school of probability. I think these arguments are interesting and worth considering. But it is also clear that despite books like Nozick's The Nature Of Rationality and Jaynes' similar tome, Bayesian philosophy does not replace the complicated mysteries of probability theories with simple clarities.

There is a further criticism, that applying behavioristic analysis to research papers is unwise, but I will make it another day.

* Both Ramsey's paper and Gibbs' book assume that the expectation operator exists for every relevant probability distribution. This is a minor flaw that can be removed without difficulty, so I will not mention it again.